Detecting Fake Agile: DoD's Six Key Questions


TL;DR: Many projects claim to be agile but are not, so leaders should look for real user involvement, continuous feedback, automation, and cross-functional collaboration to spot genuine agile practices. Key signs of “agile BS” include a lack of user interaction, manual processes where automation is possible, and siloed teams. Ask targeted questions about testing, automation, user feedback, and release cycles to verify true agile delivery and to confirm that teams can adapt based on user needs.

Version 0.4, last modified 3 Oct 2018.

Agile is a buzzword of software development, and so all DoD software development projects are, almost by default, now declared to be “agile.” The purpose of this document is to provide guidance to DoD program executives and acquisition professionals on how to detect software projects that are really using agile development versus those that are simply waterfall or spiral development in agile clothing (“agile-scrum-fall”).

Agile adherents profess certain key “values” that characterise the culture and approach of agile development. The Defense Innovation Board (DIB) developed its own guiding maxims that roughly map to these values:

| Agile Value | DIB Maxim |
|---|---|
| Individuals and interactions over processes and tools | “Competence trumps process” |
| Working software over comprehensive documentation | “Minimise time from program launch to deployment of simplest useful functionality” |
| Customer collaboration over contract negotiation | “Adopt a DevSecOps culture for software systems” |
| Responding to change over following a plan | “Software programs should start small, be iterative, and build on success ‒ or be terminated quickly” |

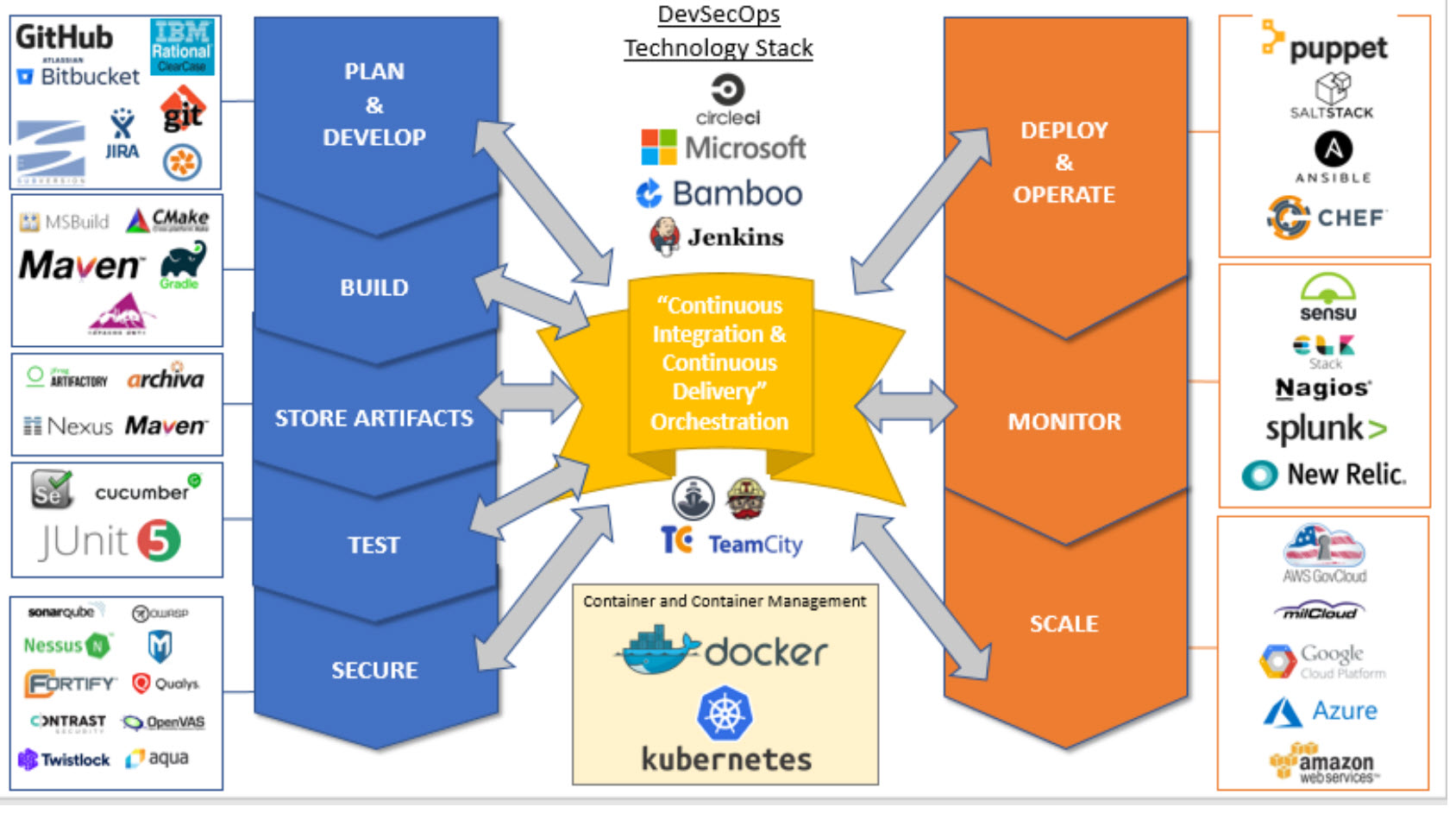

Note: Tools are illustrative; no endorsement implied.

Illustrates modern tooling across the plan, develop, build, test, secure, deploy, monitor, and scale stages.

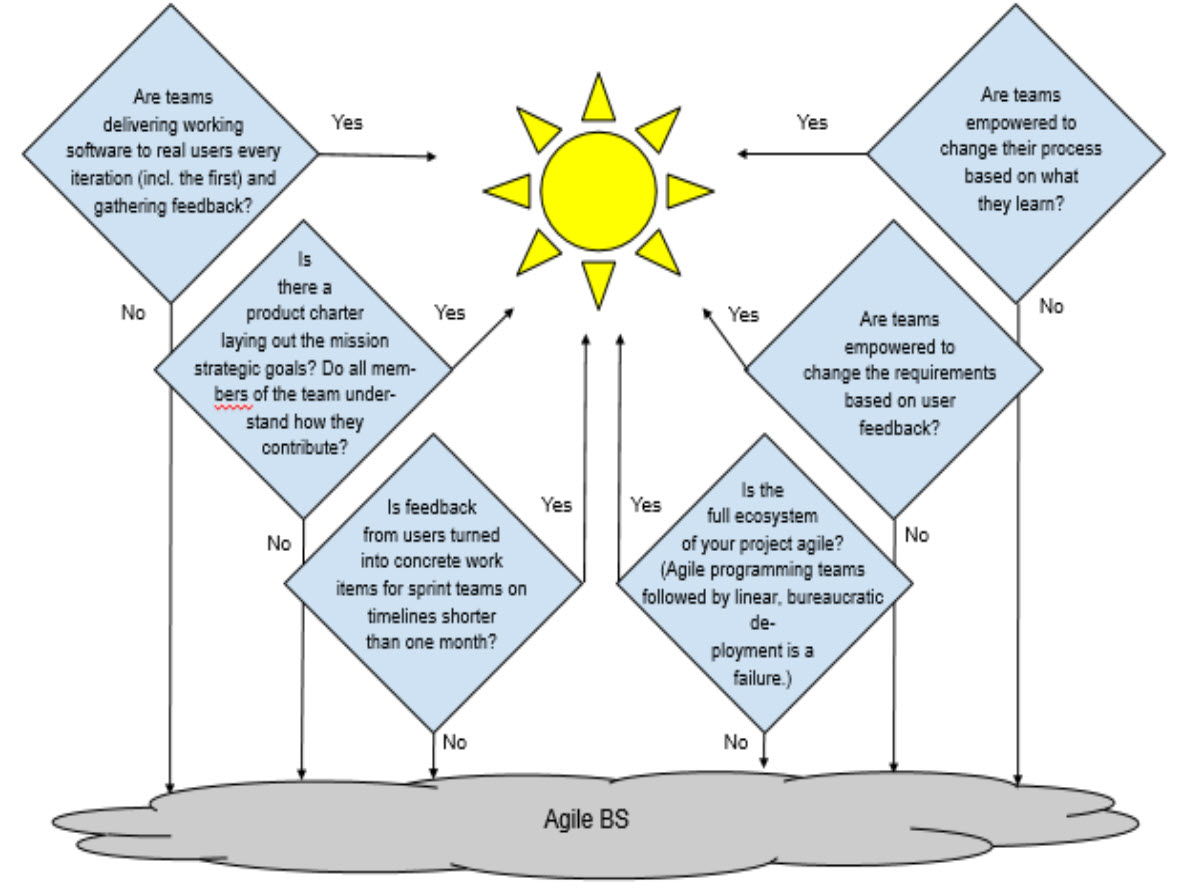

If the team is truly working in an agile way, the answer to all the above should be “yes.”

Decision flow for identifying Agile BS: it asks whether teams deliver software, gather feedback, adapt their process and requirements, and operate as part of a full agile ecosystem.
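The decision flow can also be read as a simple yes/no checklist. The Python sketch below is hypothetical: the class `AgileCheck`, the function `assess`, and the field names are illustrative inventions based on the four steps named in the caption, and the rule that every step must be answered “yes” follows the statement that a truly agile team answers “yes” to all the questions. Unlike a flowchart that stops at the first “no”, this sketch reports every failing step so reviewers can see all the gaps at once.

```python
from dataclasses import dataclass, fields


@dataclass
class AgileCheck:
    """Hypothetical yes/no answers for one team, one field per decision-flow step."""
    delivers_software: bool                 # Does the team deliver software to real users?
    gathers_feedback: bool                  # Does it gather feedback from those users?
    adapts_process_and_requirements: bool   # Do process and requirements change in response?
    part_of_agile_ecosystem: bool           # Does the wider organisation support iterative delivery?


def assess(check: AgileCheck) -> tuple[bool, list[str]]:
    """Return (looks_agile, failed_steps).

    Assumption: every step must be answered "yes"; any "no" is a possible sign of agile BS.
    fields() preserves declaration order, so failures are listed in flow order.
    """
    failed = [f.name for f in fields(check) if not getattr(check, f.name)]
    return (not failed, failed)


# Usage: a team that ships and listens, but never changes course.
team = AgileCheck(True, True, False, True)
ok, failed = assess(team)
print(ok, failed)  # False ['adapts_process_and_requirements']
```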


