A working Test Track Pro Adapter for the TFS Integration Platform

Describes building a working Test Track Pro Adapter for the TFS Integration Platform, detailing code changes, conflict handling, and incremental work …

TL;DR; Step-by-step guide to migrating FogBugz data to TFS 2012 using a custom CSV adapter with the TFS Integration Platform, including setup, mapping, and import tips.

As part of my current engagement I will be moving data from FogBugz via a custom CSV Tip Adapter for the TFS Integration Platform. This is an adapter I wrote a while ago to facilitate moving data from Excel to TFS and I just want to reuse it. The first thing I need is a development environment, as I may need to tweak this ancient code.

This post is part of a series of posts that document an upgrade of TFS 2010 to TFS 2012 with a VSS Migration, Process Template consolidation, Team Project consolidation and a FogBugz migration:

Figure: Finishing up the data consolidation

We completed the upgrade to TFS 2012, consolidated the Process Templates and then consolidated all of the Team Projects into a single collection. The last part of this marathon of fun is to get all of the existing FogBugz data into TFS. We could conceivably use a simple Excel import, but that would leave us open to a nightmare of a time getting all of the state transitions through.

Do not use the Rangers guidance for this. That way lies pain and suffering, as it assumes that you need to be able to compile the entire TFS Integration Platform. I just set up the bits I need. So what do we need? Well, first we need to install a couple of things:

Figure: Make sure that you snapshot

As I always use a VM for development, I get to use the power of snapshots to keep my environments clean and working.

Next you need to create a Solution and Project to hold your custom adapter. Use whatever layout that you want, but make sure that your Project has the following references:

You will find both in “C:\Program Files (x86)\Microsoft Team Foundation Server Integration Tools”. But in order to go through a debug cycle (the TFS Integration Platform has no Unit Tests) you need to provide a little magic.

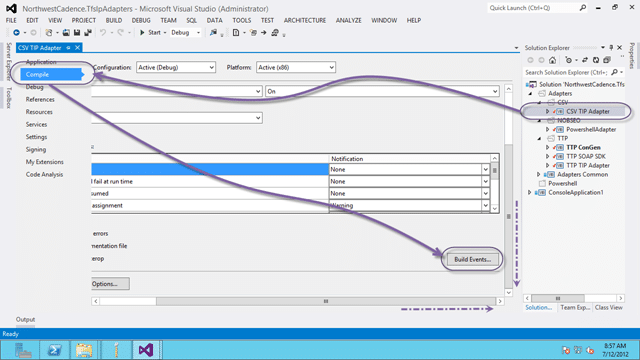

Select “Right Click Project | Compile | Build Events”

Figure: Open the project properties

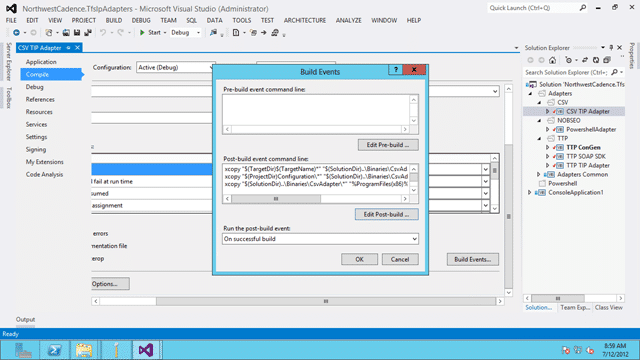

Then in the post build events enter some xcopy statements

Figure: Edit the build events

```
xcopy "$(TargetDir)$(TargetName)*" "$(SolutionDir)..\Binaries\MyAdapter\Plugins\*" /y
xcopy "$(ProjectDir)Configuration\*" "$(SolutionDir)..\Binaries\MyAdapter\Configurations\*" /y /s
xcopy "$(SolutionDir)..\Binaries\MyAdapter\*" "%ProgramFiles(x86)%\Microsoft Team Foundation Server Integration Tools\*" /y /s
```

Figure: Add some xcopy statements


Select “Start external Program” and enter the path to the Migration Console

C:\Program Files (x86)\Microsoft Team Foundation Server Integration Tools\MigrationConsole.exe

Add a command line argument of the xml config file to run

Add a working directory

C:\Program Files (x86)\Microsoft Team Foundation Server Integration Tools

Now when you debug your Class Library it will open MigrationConsole.exe with the correct test configuration and attach to the process allowing you to step through your code.

If you have configured the above steps correctly then all you need to do is select the correct configuration file from the UI and it will pre-populate all of the values for you.

Figure: Select the configuration file for your adapter

There is some interesting code in the Configuration file to achieve this, and a way of setting the defaults.

<?xml version="1.0" encoding="utf-8" standalone="yes" ?>

Figure: Configuration files can be very detailed

This file represents the data that needs to be mapped. All the CSV Adapter does is get the data into the TFS Integration Platform; the Configuration file does all of the hard work of mapping the data into TFS. If you noticed our crazy EOL and Delimiter, note that we have large amounts of history and description text that may contain the usual delimiters, so we needed something that would be unlikely to appear in the data.
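To make the point about unusual delimiters concrete, here is a minimal sketch of splitting text on multi-character row and field markers. It is not the adapter's actual parsing code; the class name `DelimitedText` and the marker values used in the usage note are made up for illustration.

```csharp
using System;
using System.Collections.Generic;

public static class DelimitedText
{
    // Splits raw text into rows and fields using multi-character markers.
    // The markers are supplied by the caller; they are assumptions for this
    // sketch, not the values from the real configuration file.
    public static List<string[]> Parse(string text, string fieldDelimiter, string rowDelimiter)
    {
        var rows = new List<string[]>();

        // Drop the empty entry that follows the final row marker.
        string[] rawRows = text.Split(new[] { rowDelimiter }, StringSplitOptions.RemoveEmptyEntries);

        foreach (string rawRow in rawRows)
        {
            // Keep empty fields: an empty column is still a column.
            rows.Add(rawRow.Split(new[] { fieldDelimiter }, StringSplitOptions.None));
        }

        return rows;
    }
}
```

Calling `DelimitedText.Parse("1|~|Fix, then test|#|2|~|Line one\nLine two|#|", "|~|", "|#|")` yields two rows, and the commas and line breaks inside the description fields survive untouched because neither is treated as a separator.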

Figure: It will now list your adapter on the left hand side

Now that you can select the adapter we are ready to rock…

The real advantage of this over Excel is that we can bypass the API rules and write data directly into TFS. This allows us to write data directly into a particular state even if that state does not exist. While not good in most circumstances, this is invaluable when migrating data; otherwise we would need to walk each work item through its states to get it to “Done” or “Closed”.

Figure: Some data may need to be updated to enable saving

To help with this I have created a mapping file, but it is really not that easy. We need to think about a number of things as part of the mapping. What are the old States, what are the new ones, and how well do they match up?

Figure: Mapping from State to State

The state mappings are the easiest to mess up, so take your time with these. I like to have all of my work items in a working state, but some customers either don’t care or can’t decide what the mapping should be. Don’t get talked into adding lots of redundant states to TFS. Why, you might ask?

Well, if you change the states or the workflow for the states you are taking on a little more than just updating the states. What about the Reporting Services reports that rely on them, or the Excel reports, or even the queries? There is a lot dependent on the states, and in changing them you take on all of that maintenance…

I am not saying that you should not change the states (when actually I am), but you need to consider all of the ramifications.
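Conceptually, the state mapping is just a lookup from each old state to an existing target state, with a decision about what to do when nothing matches. The sketch below illustrates that idea in plain C#; it is not the TFS Integration Platform's configuration format, and the state names and the `StateMap` class are hypothetical.

```csharp
using System;
using System.Collections.Generic;

public static class StateMap
{
    // Hypothetical source states on the left, existing target states on the right.
    // Several old states can collapse into one target state, which avoids
    // adding redundant states to the process template.
    private static readonly Dictionary<string, string> Map =
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase)
        {
            { "Active", "In Progress" },
            { "Resolved", "Done" },
            { "Closed", "Done" },
        };

    // Returns the target state, or the fallback when the source state is
    // missing or has no mapping.
    public static string Translate(string sourceState, string fallback)
    {
        if (sourceState == null)
        {
            return fallback;
        }

        string target;
        return Map.TryGetValue(sourceState, out target) ? target : fallback;
    }
}
```

Writing the table out like this before editing the real mapping makes it easy to spot old states that nobody has made a decision about yet, since they all land on the fallback.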

When importing data from another system I always have data that I would want as part of Areas & Iterations, but getting a decision on the format and mapping at the time would be pretty hard so I tend to prefix all Areas and Iterations with the name of the system that I am pulling the data from. The customer can then move and rearrange after the fact.

Figure: Aggregate fields

To achieve this you can use the Aggregate fields function and merge one or more fields. They even support mappings as well so that you can do some clean-up or manipulation as you go through.
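As an illustration of the prefixing idea only, the following sketch builds a classification path from a team project, a source system name and the original path. The real work is done by the Aggregate fields mapping in the configuration file; the `ClassificationPath` helper and its parameter names are assumptions made for this example.

```csharp
using System;
using System.Collections.Generic;

public static class ClassificationPath
{
    // Builds "<teamProject>\<sourceSystem>\<original path>" so that imported
    // Areas and Iterations sit under a node named after the source system.
    public static string Prefix(string teamProject, string sourceSystem, string sourcePath)
    {
        var parts = new List<string> { teamProject, sourceSystem };

        if (!string.IsNullOrWhiteSpace(sourcePath))
        {
            // Accept either slash style in the source data and skip empty segments.
            parts.AddRange(sourcePath.Split(new[] { '\\', '/' }, StringSplitOptions.RemoveEmptyEntries));
        }

        return string.Join("\\", parts);
    }
}
```

For example, `ClassificationPath.Prefix("TestProject2", "FogBugz", "Website/Billing")` returns `TestProject2\FogBugz\Website\Billing`, and an empty source path returns `TestProject2\FogBugz`.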

This turned out to be a case of SQL jujitsu and, even better, I did not have to do it. My client provided me with a complete CSV file with a custom delimiter so that I could import it into TFS.

I did have to make a bunch of changes to my CSV adapter as I had not really got it working before. Now that it is working, it will import any delimited tabular data. Remember that it is a Tip adapter and does not directly import history; however, it is aware of changes. If you write an updated file where the “DeltaDateColumn” field has been modified, the data for that Work Item will be reapplied over the top of the old one (yes, it will overwrite any changes), but this does allow for a staged migration.
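The change detection described above can be pictured as a comparison between each row's delta date and the date recorded the last time that row was processed. This is a sketch of the idea, not the adapter's implementation; `DeltaFilter`, the string IDs and the in-memory dictionary are all assumptions for the example.

```csharp
using System;
using System.Collections.Generic;

public static class DeltaFilter
{
    // Returns the IDs of rows that are new or whose delta date is later than
    // the one recorded on a previous run, and records the new dates in lastSeen.
    public static List<string> ChangedIds(
        IEnumerable<KeyValuePair<string, DateTime>> rows,
        IDictionary<string, DateTime> lastSeen)
    {
        var changed = new List<string>();

        foreach (var row in rows)
        {
            DateTime previous;
            bool isNew = !lastSeen.TryGetValue(row.Key, out previous);

            if (isNew || row.Value > previous)
            {
                changed.Add(row.Key);
                lastSeen[row.Key] = row.Value;
            }
        }

        return changed;
    }
}
```

On the first pass every row counts as changed. On a later pass over a refreshed export, only rows with a newer delta date are returned, which is what makes a staged migration possible.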

Something that we have been doing is some data transformation as part of the output. We have effectively created a temporary table to hold the data and loaded and manipulated the data into that format for export to CSV, but you can just do a data dump depending on your requirements…

Importing Hierarchy

The CSV Adapter by default does not support hierarchy. It could, but I have not investigated that part of the TFS Integration Platform so I just wrote a small command line utility to do the import from a CSV.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using Microsoft.TeamFoundation.Client;
using Microsoft.TeamFoundation.WorkItemTracking.Client;

class Program
{
    static void Main(string[] args)
    {
        // args[0] is the URL of the Team Project Collection
        Console.WriteLine(string.Format("Connecting to {0}", args[0]));
        TfsTeamProjectCollection tfs = new TfsTeamProjectCollection(new Uri(args[0]));

        // Each CSV row is "child,parent"; group the child IDs under their parent ID
        Console.WriteLine(string.Format("Loading {0}", @"c:\temp\ParentChild.csv"));
        Dictionary<int, List<int>> relates = new Dictionary<int, List<int>>();
        using (StreamReader sr = new StreamReader(@"c:\temp\ParentChild.csv"))
        {
            while (!sr.EndOfStream)
            {
                string[] row = sr.ReadLine().Split(',');
                if (!relates.ContainsKey(int.Parse(row[1])))
                {
                    relates.Add(int.Parse(row[1]), new List<int>());
                }
                relates[int.Parse(row[1])].Add(int.Parse(row[0]));
            }
        }

        WorkItemStore store = tfs.GetService<WorkItemStore>();
        WorkItemLinkTypeEnd linkTypeEnd = store.WorkItemLinkTypes.LinkTypeEnds["Child"];
        WorkItemCollection wic = store.Query(@"SELECT [System.Id] FROM WorkItems WHERE [System.AreaPath] UNDER 'TestProject2\FogBugz' ORDER BY [System.Id]");
        foreach (WorkItem sourcew in wic)
        {
            if (sourcew.Type.Name == "Task")
            {
                // TASKS: the parent is found by negating the task's reflected ID
                decimal parent = decimal.Parse((string)sourcew.Fields["TfsMigrationTool.ReflectedWorkItemId"].Value);
                parent = decimal.Negate(parent);

                WorkItem parentw = store.Query(string.Format(@"SELECT [System.Id] FROM WorkItems WHERE [TfsMigrationTool.ReflectedWorkItemId] = '{0}' ORDER BY [System.Id]", parent))[0];
                parentw.Open();
                parentw.Links.Add(new RelatedLink(linkTypeEnd, sourcew.Id));
                parentw.Save();

                Console.WriteLine(parent);
            }
            else
            {
                // Everything else: look up this item's children from the CSV
                int parentIsMe = int.Parse((string)sourcew.Fields["TfsMigrationTool.ReflectedWorkItemId"].Value);
                sourcew.Open();
                Console.WriteLine(string.Format("Processing Parent {0}", parentIsMe));
                if (relates.ContainsKey(parentIsMe))
                {
                    foreach (int child in relates[parentIsMe])
                    {
                        WorkItem childw = store.Query(string.Format(@"SELECT [System.Id] FROM WorkItems WHERE [TfsMigrationTool.ReflectedWorkItemId] = '{0}' ORDER BY [System.Id]", child))[0];
                        childw.Open();
                        sourcew.Links.Add(new RelatedLink(linkTypeEnd, childw.Id));
                        sourcew.Save();
                        Console.WriteLine(string.Format("Adding child {0} to {1}", childw.Id, parentIsMe));
                    }
                }
            }
        }
        Console.ReadKey();
    }
}
```

Figure: Crude but effective

For the most part you don’t even need to know the project name as it’s all in the IDs.

This is the easiest part now that we have our data format and our configuration but we still had a nightmare getting the TFS Integration Platform to recognise the new Adapter. You need to make sure that you restart the “Windows Service” if you have it running but other than that everything went smoothly.
